Parsing Petabytes of Common Crawl Data to Find Domains for Your Target

Greetings! In this article we will explore ways to identify similar domains that can be attributed to a company’s main domain.

When performing security research for bug bounty or penetration testing purposes, it is really important to identify as many domains as possible for the relevant organization. These domains could belong to acquisitions, partners, and so on. Once we identify such a domain, let’s say redacted.com, there’s a possibility that the same company owns the name under other TLDs such as .co, .net, etc. That’s exactly what we are going to set up today: a workflow that helps us find more attributable assets for the company.

What is Common Crawl?

Common Crawl is a non-profit organization that provides open access to a vast dataset of web crawl data. Their goal is to democratize access to web information by making it freely available to anyone who wants to analyze or utilize it for research, applications, or other purposes.

What is AWS Athena?

Amazon Athena is an interactive query service provided by Amazon Web Services (AWS) that enables users to analyze data stored in Amazon S3 (Simple Storage Service) using standard SQL queries. It is a serverless service, meaning that users do not need to manage any infrastructure or servers to use Athena. Instead, users can simply point Athena to their data stored in S3 and start querying it immediately.

Let’s Start the Fun Part

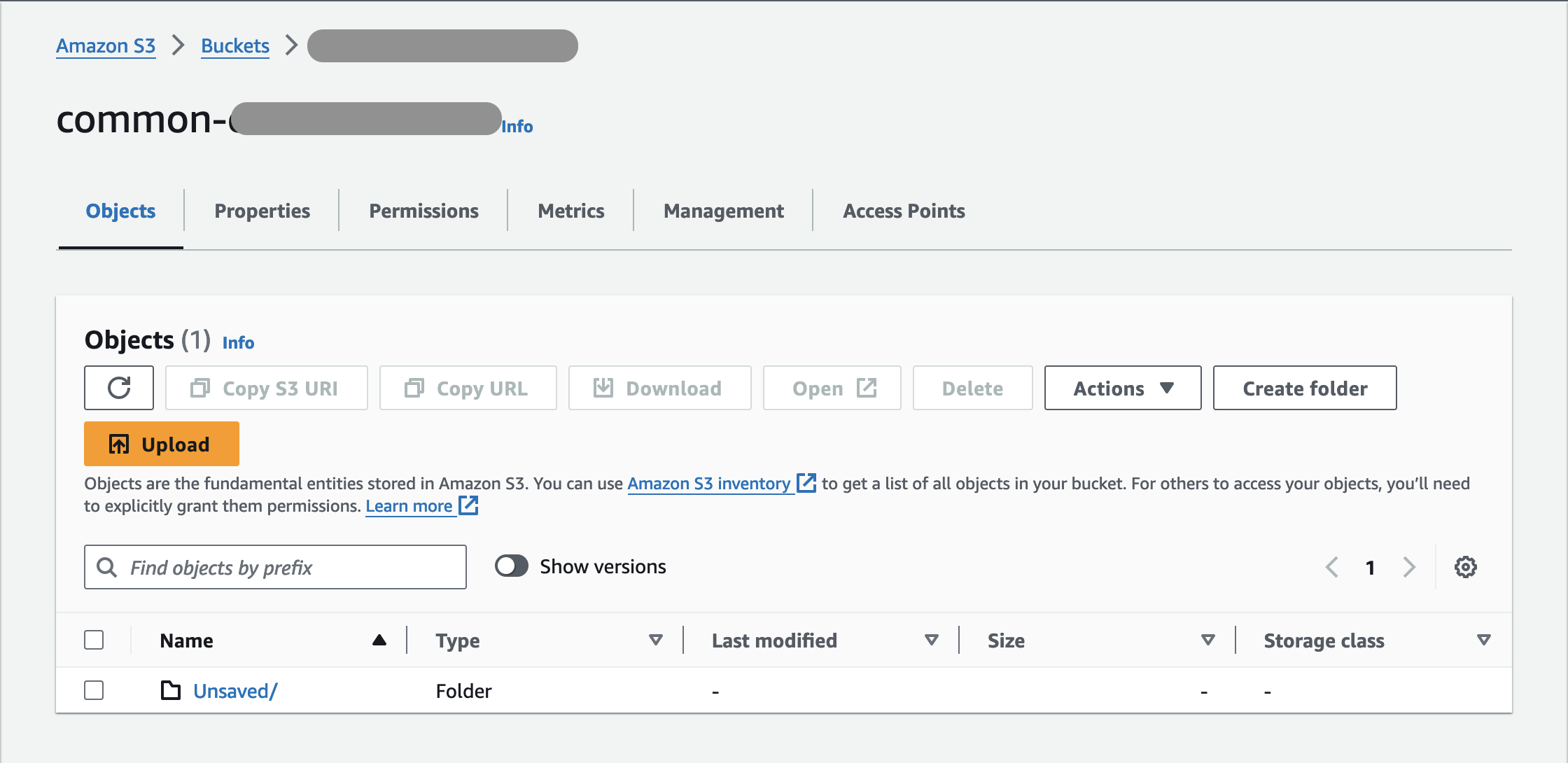

To get started, the first thing we need to do is create an S3 bucket. Simply go to the S3 service section in AWS and create a bucket. While creating it, make sure its AWS Region is the same as the Region you will use for Athena.

For this write-up I’ll be using the region ap-south-1.

Create Database

Simply navigate to AWS Athena in the ap-south-1 region and create the database using the following command:

CREATE DATABASE ccindex

Create Table

Create a new table with the following query:

CREATE EXTERNAL TABLE IF NOT EXISTS ccindex (
  url_surtkey STRING,
  url STRING,
  url_host_name STRING,
  url_host_tld STRING,
  url_host_2nd_last_part STRING,
  url_host_3rd_last_part STRING,
  url_host_4th_last_part STRING,
  url_host_5th_last_part STRING,
  url_host_registry_suffix STRING,
  url_host_registered_domain STRING,
  url_host_private_suffix STRING,
  url_host_private_domain STRING,
  url_protocol STRING,
  url_port INT,
  url_path STRING,
  url_query STRING,
  fetch_time TIMESTAMP,
  fetch_status SMALLINT,
  content_digest STRING,
  content_mime_type STRING,
  content_mime_detected STRING,
  content_charset STRING,
  content_languages STRING,
  warc_filename STRING,
  warc_record_offset INT,
  warc_record_length INT,
  warc_segment STRING)
PARTITIONED BY (
  crawl STRING,
  subset STRING)
STORED AS parquet
LOCATION 's3://commoncrawl/cc-index/table/cc-main/warc/';

This maps the Common Crawl dataset, which stays in Common Crawl’s own S3 bucket, to a table in the database you defined in AWS Athena. Only the results of our queries will be stored in our S3 bucket.

Match Table

MSCK REPAIR TABLE ccindex

This command loads the table’s partitions (crawl and subset). Whenever a new index is released you have to run it again so that the table picks up the latest dataset.

Common Crawl indexes can be found here: https://index.commoncrawl.org/collinfo.json
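
The crawl identifiers used in queries (for example CC-MAIN-2023-50) correspond to the id field of the entries in that JSON file. As a rough sketch, the hypothetical helper below, list_crawl_ids, pulls those identifiers out with grep and sed so that no JSON parser is needed. It assumes every entry carries an "id" key with a quoted string value.

```bash
#!/bin/bash
# Hypothetical helper (not part of the original write-up): reads collinfo.json
# on stdin and prints one crawl ID per line, e.g. CC-MAIN-2023-50.
list_crawl_ids() {
  grep -o '"id": *"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

# Usage (requires network access):
#   curl -s https://index.commoncrawl.org/collinfo.json | list_crawl_ids
```

Any ID printed this way can be used as the crawl value in the WHERE clause once MSCK REPAIR TABLE has been run for it.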

AWS CLI

We will be querying the Common Crawl dataset using the AWS CLI. Make sure you have installed the AWS CLI and configured your AWS security credentials using the aws configure command. While configuring the credentials, make sure you use the same region as for AWS Athena and the S3 bucket. I will be using ap-south-1, as I used it for both S3 and Athena.
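
A quick way to confirm the region requirement before running any query is to ask the CLI which region it is configured for. The sketch below assumes the default profile is in use; check_region is a hypothetical helper name, while aws configure get region is the standard CLI call.

```bash
#!/bin/bash
# Hypothetical helper: compares the CLI's configured region with the region
# used for the S3 bucket and Athena (ap-south-1 in this write-up).
check_region() {
  local expected=$1 configured
  configured=$(aws configure get region)
  if [ "$configured" = "$expected" ]; then
    echo "[+] AWS CLI region is $configured"
  else
    echo "[-] AWS CLI region is '${configured}', expected '${expected}'"
    return 1
  fi
}

# Usage:
#   check_region ap-south-1
```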

Finding Top-Level Domains (TLDs)

We will use the following script to find hostnames for the target across top-level domains:

#!/bin/bash
domain=$1  # keyword to search for, e.g. riotgames
s3_bucket_URI="s3://<bucket_name>/Unsaved/athena-cli-results"  # where Athena writes the query results
if [ -z "$domain" ]; then echo "Usage: bash script.sh <keyword>"; exit 1; fi

echo "[+] Executing AWS Athena Command..."
csv_name=$(aws athena start-query-execution --query-string "SELECT DISTINCT(url_host_name) FROM \"ccindex\".\"ccindex\" WHERE crawl = 'CC-MAIN-2023-50' AND subset = 'warc' AND url_host_name LIKE '%$domain%'" --query-execution-context Database=ccindex --result-configuration OutputLocation="$s3_bucket_URI" --query QueryExecutionId --output text)  # the query execution ID doubles as the result file name

echo "[+] Sleeping for 10 seconds ..."
sleep 10  # fixed wait; increase it if the query needs longer to finish

echo "[+] Fetched Results: ${csv_name}.csv"
echo "[+] Downloading file from S3 bucket"
aws s3 cp "$s3_bucket_URI/${csv_name}.csv" "."

echo "[-] Removing file from S3 bucket"
aws s3 rm "$s3_bucket_URI/${csv_name}.csv"
aws s3 rm "$s3_bucket_URI/${csv_name}.csv.metadata"

cat "${csv_name}.csv"

You can change the bucket URI to match the bucket you created. Save the bash script and run it as bash script.sh riotgames. The script prints all hostnames from the Common Crawl dataset that contain riotgames.
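
The fixed 10-second sleep in the script is a guess: a query over a full crawl can take longer, and the download then fails because the CSV does not exist yet. One possible alternative, sketched below, polls aws athena get-query-execution until the query leaves the QUEUED and RUNNING states. The function name wait_for_query and the 5-second polling interval are assumptions made for illustration.

```bash
#!/bin/bash
# Hypothetical helper: blocks until the Athena query with the given execution
# ID has finished. Returns 0 on SUCCEEDED and 1 on any other final state.
wait_for_query() {
  local query_id=$1 state
  while true; do
    state=$(aws athena get-query-execution \
      --query-execution-id "$query_id" \
      --query 'QueryExecution.Status.State' --output text)
    case "$state" in
      QUEUED|RUNNING) sleep 5 ;;
      SUCCEEDED) return 0 ;;
      *) echo "[-] Query $query_id ended in state: $state" >&2; return 1 ;;
    esac
  done
}

# Usage inside the script, in place of the fixed sleep:
#   wait_for_query "$csv_name" || exit 1
```

An empty or unexpected state (for example when the CLI call itself fails) falls through to the last branch, so the loop cannot spin forever on an error.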

The results contain hostnames under several TLDs, and of course we have to check manually whether they really belong to the relevant company.
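
Because the query uses LIKE '%keyword%', the CSV also contains hostnames that merely include the keyword somewhere, which makes the manual review longer. As a rough first pass, the sketch below keeps only hostnames in which the keyword is a complete label followed by a suffix (for example redacted.net or www.redacted.com). It assumes the Athena result file has a header row and double-quoted values; filter_tld_variants is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: reads an Athena result CSV on stdin and prints the
# unique hostnames in which the keyword is a whole label followed by a suffix.
# Assumes the keyword contains only letters, digits, and hyphens.
filter_tld_variants() {
  local keyword=$1
  # Drop the header row, strip the quotes around each value, keep the
  # hostnames where the keyword is a full label, then de-duplicate.
  tail -n +2 |
    tr -d '"' |
    grep -E "(^|\.)${keyword}\.[a-z0-9.-]+$" |
    sort -u
}

# Usage:
#   filter_tld_variants riotgames < results.csv
```

This only narrows the list; a matching hostname can still belong to an unrelated party, so the manual ownership check remains necessary.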

If you have any questions, reach out on LinkedIn.